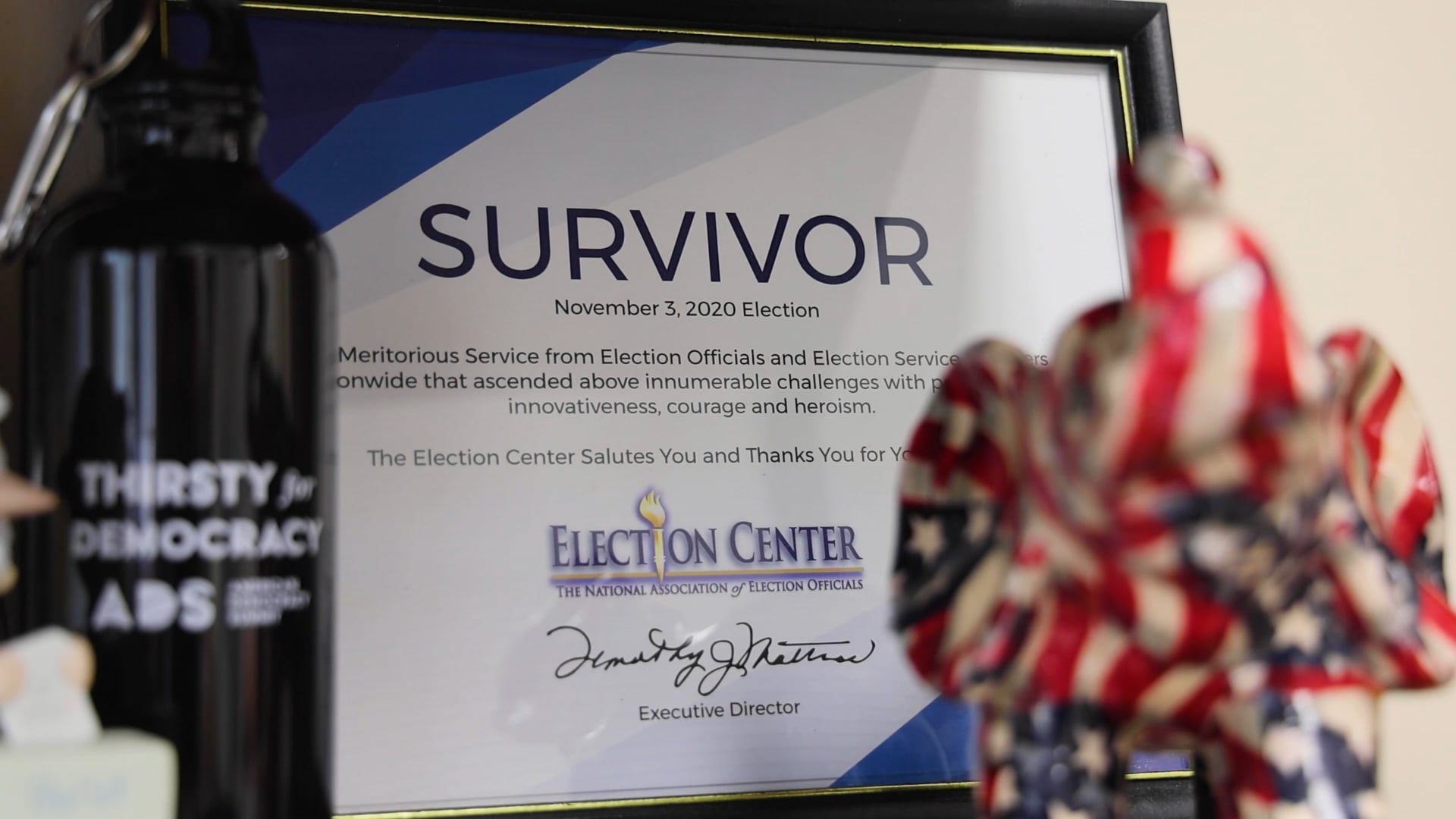

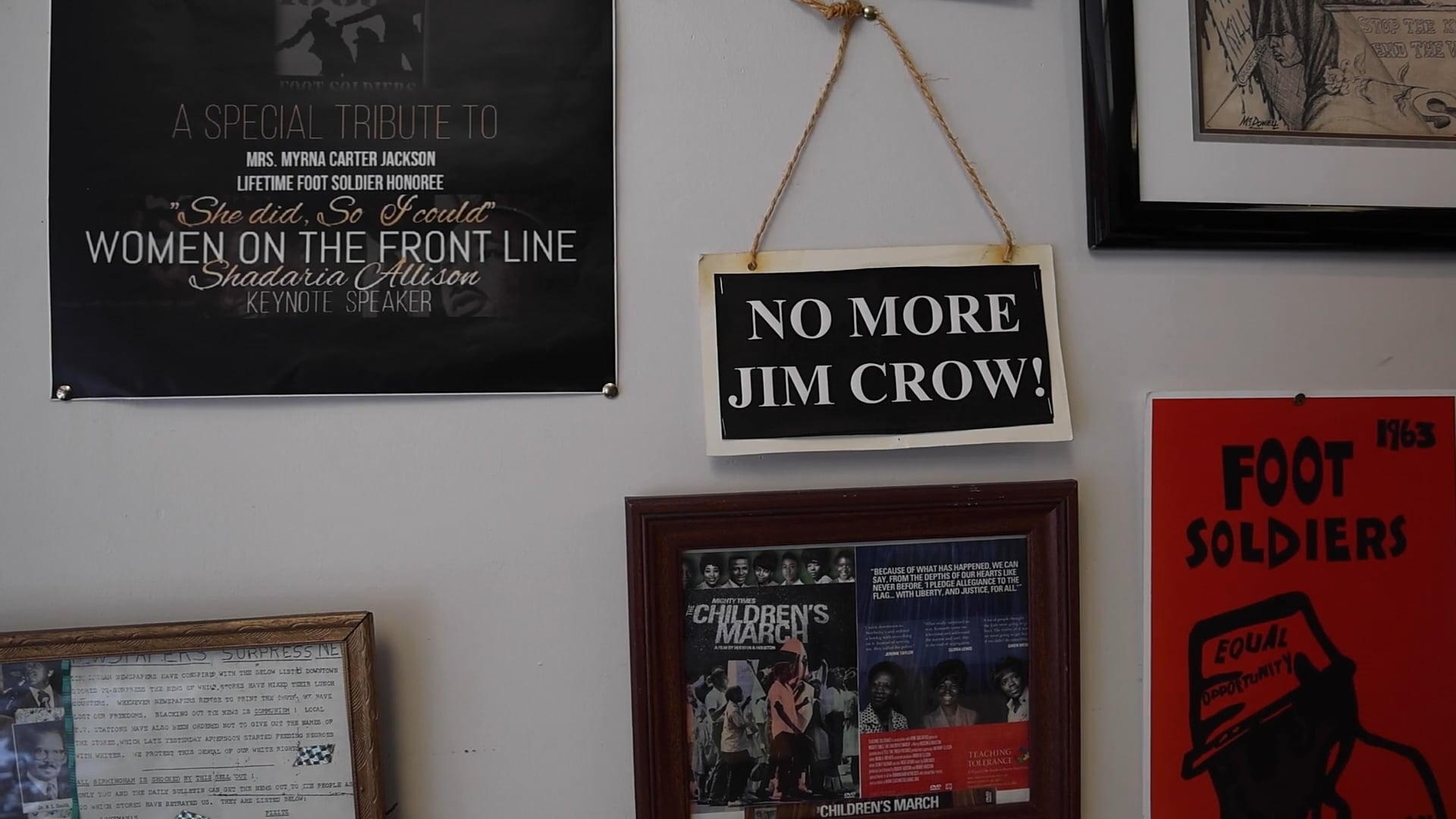

America is months away from an election unlike any other. Election denialism is on the rise. Intimidation of and threats against election workers are no longer rare; they have become the norm. Disinformation is rampant. What does all of this say about the state of our democracy, come November and beyond? “Fractured,” a project by Carnegie-Knight News21, explores, and tries to answer, that very question.

View the official trailer:

(Video by Donovan Johnson/News21)